🎧 AI‑Powered Audio Interpretation

Explore how to manage audio channels and streaming settings in InterScribe.

By Admin User, 6 months ago

🤖 Overview

InterScribe’s AI voice interpretation converts translated captions into spoken audio using high‑quality synthetic voices. This lets attendees listen in their own language — ideal for hands‑free participation or supporting users with limited literacy.

🔬 Beta Notice: AI voice interpretation is currently in beta. Expect a delay of several seconds (typically 5–15 seconds) while the system gathers enough context for an accurate, context-aware translation. Voice quality and responsiveness will continue to improve with usage and feedback.

🎛 Enabling AI Voice Channels

AI voice interpretation is included in Engage and Elevate plans. The Launch plan includes caption translation only.

To enable AI voice for a language:

- Open the Audio Interpretation & Translation section when creating or editing a session.

- Under AI Voice Interpretation, choose the language (e.g. Portuguese) for which you want to enable synthetic audio.

- Save the session.

When the session is live, attendees who select that language will see an Audio option in addition to captions.

➕ Adding More Voice Channels

Each session includes one AI voice channel by default. To offer additional AI voice languages (e.g. Spanish and French):

- Purchase additional voice channels at a low hourly rate, typically $2–$3 per channel per hour.

- Request add-ons via your subscription settings or contact InterScribe Support.

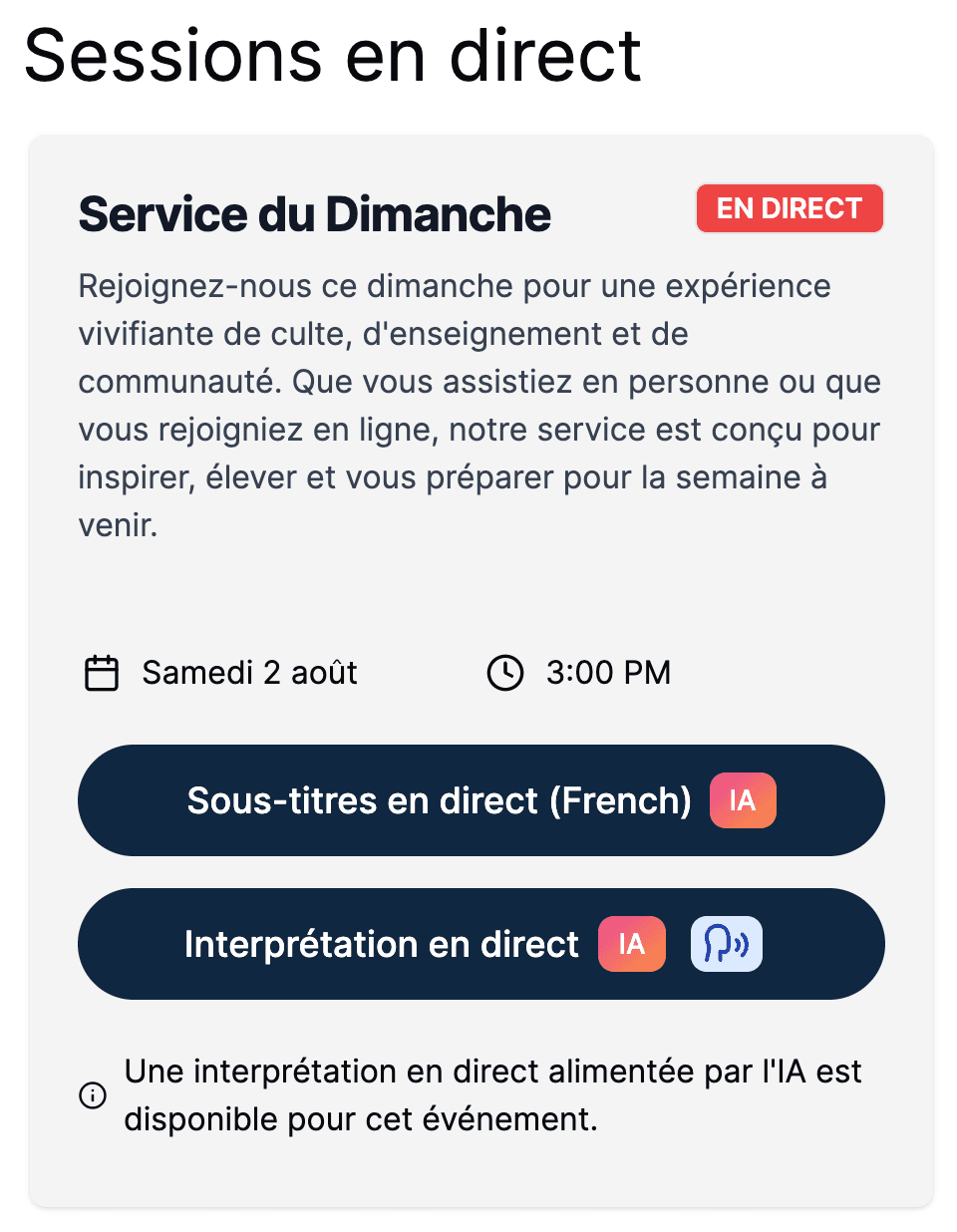
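To budget for add-ons, you can turn the pricing above into a quick estimate. The Python helper below is a hypothetical sketch, not an InterScribe tool. It assumes that the one included channel covers the first language, that each additional language needs one extra channel, and that the typical $2–$3/hour rate applies to each channel. Check your subscription settings for your actual pricing.

```python
def estimate_addon_cost(languages, hours, rate_low=2.0, rate_high=3.0):
    """Return a (low, high) USD estimate for extra AI voice channels.

    Hypothetical helper: assumes one AI voice channel is included per
    session and every additional language is billed hourly, somewhere
    between rate_low and rate_high per channel.
    """
    if hours < 0:
        raise ValueError("hours must be non-negative")
    # De-duplicate so the same language is never counted twice.
    unique_languages = set(languages)
    # The first language uses the included channel; the rest are add-ons.
    extra_channels = max(len(unique_languages) - 1, 0)
    low = extra_channels * hours * rate_low
    high = extra_channels * hours * rate_high
    return low, high


# Portuguese uses the included channel; Spanish and French are add-ons.
print(estimate_addon_cost(["Portuguese", "Spanish", "French"], hours=3))
```

For a 3-hour session in Portuguese, Spanish, and French, this prints `(12.0, 18.0)`, which means roughly $12–$18 for the two add-on channels under the stated assumptions.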

🎧 Attendee Experience

When joining a session with AI voice enabled:

- Attendees choose their language from the dropdown menu.

- If voice is available, an Audio toggle appears.

- Tapping it plays a synthetic voice reading the translated captions.

- A brief reminder suggests wearing headphones to avoid disrupting others.

- Audio volume is independent of the original stream — attendees can balance or mute either.
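Independent volume means the original audio and the AI voice are scaled separately before they are combined. The Python sketch below illustrates that idea. It assumes both streams are already decoded to floating-point samples between -1.0 and 1.0 at the same sample rate. The function and parameter names are illustrative and do not describe InterScribe's actual player.

```python
def mix_streams(original, ai_voice, original_gain, voice_gain):
    """Mix two equal-rate sample sequences with independent gains.

    Illustrative only: gains are linear multipliers (0.0 mutes a stream),
    and each mixed sample is clipped to [-1.0, 1.0] to avoid overflow.
    """
    for gain in (original_gain, voice_gain):
        if gain < 0:
            raise ValueError("gains must be non-negative")
    mixed = []
    # zip() stops at the shorter stream; a real player would buffer instead.
    for a, b in zip(original, ai_voice):
        sample = a * original_gain + b * voice_gain
        mixed.append(max(-1.0, min(1.0, sample)))
    return mixed


# Lower the original speaker to half volume; keep the AI voice at full volume.
print(mix_streams([0.5, -0.5], [0.25, 0.25], original_gain=0.5, voice_gain=1.0))
```

Setting `original_gain=0.0` mutes the speaker entirely, and setting `voice_gain=0.0` mutes the AI voice, which mirrors the attendee's ability to balance or mute either stream.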

✅ Best Practices

💬 Add context & glossary: AI voices read your translated captions, so better captions produce better audio. Use the Context & Glossary tools to improve pronunciation, phrasing, and domain-specific accuracy.

🌍 Choose strategic languages: AI voice is available for dozens of major languages. If a language isn’t supported, attendees can fall back to their device’s built-in text-to-speech (TTS) for audio playback.

📢 Let your audience know: Promote the AI voice option during announcements or in printed materials. Some users prefer listening over reading, especially on mobile.

❓ FAQs

Is the AI voice natural-sounding?
Yes. The system uses modern neural text-to-speech models to produce human-like voices. They are not identical to a live interpreter, but they offer high clarity and consistency.

Is there a delay?
Yes. Expect a short delay (5–15 seconds), which gives the system enough context to produce a high-quality translation. This delay is in addition to any normal streaming latency.

Can I choose a male or female voice?
Some languages offer gender options, and availability may depend on your plan. Contact support to configure voice gender or to ask about future voice cloning options.

Can I mix AI and human interpreters?
Absolutely. You can assign a human interpreter for one language (e.g. Spanish) and use AI voice for another (e.g. Portuguese). Attendees choose which one to listen to.
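When you mix human and AI interpretation, it can help to write down which audio source each language will use. The Python sketch below models that plan under stated assumptions. The three language sets are hypothetical inputs you would fill in from your own session settings, not values exported by InterScribe. The rules follow this article: a human interpreter if one is assigned, otherwise AI voice if a channel is enabled, otherwise device text-to-speech for languages AI voice doesn't support. Treating a supported language with no enabled channel as "captions only" is an assumption.

```python
from enum import Enum


class AudioSource(Enum):
    HUMAN_INTERPRETER = "human interpreter"
    AI_VOICE = "AI voice"
    DEVICE_TTS = "device text-to-speech"
    CAPTIONS_ONLY = "captions only"


def plan_audio(language, human_languages, ai_enabled, ai_supported):
    """Pick the audio source attendees would hear for one language.

    Hypothetical planning helper. All inputs after `language` are sets:
      human_languages: languages with an assigned human interpreter
      ai_enabled:      languages with an AI voice channel on this session
      ai_supported:    languages the AI voice can speak at all
    """
    if language in human_languages:
        return AudioSource.HUMAN_INTERPRETER
    if language in ai_enabled:
        return AudioSource.AI_VOICE
    if language not in ai_supported:
        # No AI voice exists for this language; attendees can use device TTS.
        return AudioSource.DEVICE_TTS
    # Assumption: supported but not enabled means no Audio toggle, captions only.
    return AudioSource.CAPTIONS_ONLY


# Example plan: human interpreter for Spanish, AI voice for Portuguese.
# The "supported" set here is made up purely for illustration.
human = {"Spanish"}
enabled = {"Portuguese"}
supported = {"Spanish", "Portuguese", "French"}
for lang in ["Spanish", "Portuguese", "French", "Quechua"]:
    print(lang, "->", plan_audio(lang, human, enabled, supported).value)
```

Checking the plan before the session makes it easy to spot languages that will have captions but no audio, so you can decide whether to add a voice channel or a human interpreter.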